8 Years Later: Does the GeForce GTX 580 Still Have Game in 2018?

Today we're turning our clocks all the way back to November 2010 to revisit the once mighty GeForce GTX 580, the 40nm Fermi refresh that helped Nvidia sweep its troubled GeForce GTX 480 under the rug.

If you recall, the GTX 480 and 470 (GF100) launched in March 2010, just eight months before the GTX 580, giving gamers an opportunity to buy a $500 graphics card that could double as a George Foreman grill. With a TDP of 250 watts, the GTX 480 didn't raise too many eyebrows: around then, AMD's much smaller Radeon HD 5870 was rated at 188 watts, while its dual-GPU sibling, the Radeon HD 5970, smoked most power supplies with its 294-watt rating.

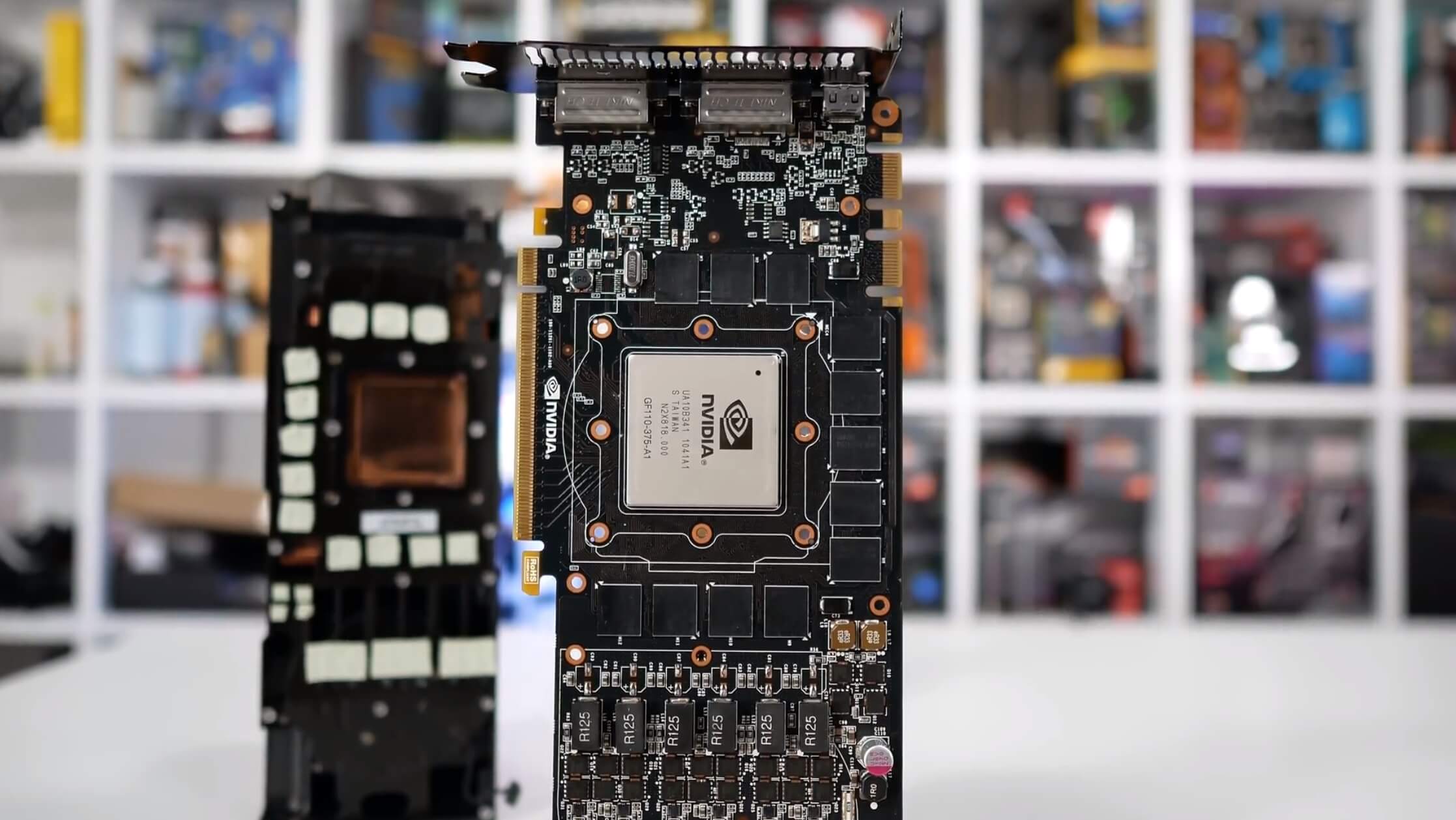

Although both the Radeon HD 5870 and GeForce GTX 480 were built on the same 40nm manufacturing process, the GeForce was massive: its die was over 50% larger. Nvidia aimed to create a GPU that delivered both strong gaming and strong compute performance, and the result was a GF100 die measuring an insane 529mm².
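As a quick sanity check on the "over 50% larger" claim, the sketch below compares the two die areas. It assumes a die area of 334mm² for the HD 5870's Cypress GPU, a figure that does not appear in this article; the 529mm² GF100 figure comes from the text.

```python
def percent_larger(a_mm2: float, b_mm2: float) -> float:
    """Return how much larger area a is than area b, in percent."""
    return (a_mm2 / b_mm2 - 1.0) * 100.0

GF100_MM2 = 529.0    # GeForce GTX 480 die, from the article
CYPRESS_MM2 = 334.0  # Radeon HD 5870 die (assumed figure, not from the article)

print(f"GF100 is {percent_larger(GF100_MM2, CYPRESS_MM2):.0f}% larger")
```

Under that assumption the script prints a figure of about 58%, comfortably "over 50%".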

A die this large was a problem for TSMC's 40nm process, which was still maturing at the time, and the result was poor yields and leaky transistors. For gamers, the GTX 480 was simply too hot to handle – you couldn't touch it even 10 minutes after shutting down – and it was far from quiet as well.

Fermi went down as one of Nvidia's worst creations, and the company knew at the time that it had a real stinker on its hands, so it went back to the drawing board and returned later that year to relaunch Fermi as the GTX 580. By November, TSMC had ironed out the kinks in its 40nm process, and we got 7% more cores in a slightly smaller die. Of course, TSMC wasn't the only one that had time to work things out: Nvidia also fixed what was wrong with GF100 when it created GF110 (the Fermi refresh).
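The "7% more cores in a slightly smaller die" comparison works out as follows. This is a minimal sketch; the GTX 480's count of 480 CUDA cores is an assumption not stated in this article, while the 512 cores, 529mm² (GF100) and 520mm² (GF110) are the figures quoted in this piece.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change going from old to new (negative means smaller)."""
    return (new / old - 1.0) * 100.0

GTX480_CORES = 480                  # assumed, not stated in the article
GTX580_CORES = 512                  # from the article
GF100_MM2, GF110_MM2 = 529.0, 520.0 # die areas from the article

core_gain = pct_change(GTX480_CORES, GTX580_CORES)  # roughly +6.7%
die_change = pct_change(GF100_MM2, GF110_MM2)       # roughly -1.7%
print(f"cores: {core_gain:+.1f}%, die area: {die_change:+.1f}%")
```

Running it prints `cores: +6.7%, die area: -1.7%`, i.e. about 7% more cores in a die that shrank by under 2%.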

Based on our own testing from 2010, power consumption was reduced by at least 5% and reference card load temperatures dropped 15%. Making those results more impressive, the GTX 580 was ~25% faster than the 480.
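Those 2010 figures also imply a sizeable efficiency gain. Treating "~25% faster" and "at least 5% less power" as simple averages (an assumption; real results varied by game), performance per watt improved by roughly a third, and because the power saving was at least 5%, the true gain could be somewhat higher.

```python
def perf_per_watt_gain(speedup: float, power_ratio: float) -> float:
    """Relative perf/W: (new perf / old perf) divided by (new power / old power)."""
    return speedup / power_ratio

# GTX 580 vs GTX 480: ~1.25x the performance at <= 0.95x the power.
ratio = perf_per_watt_gain(1.25, 0.95)
print(f"roughly {(ratio - 1) * 100:.0f}% better performance per watt")
```

This prints `roughly 32% better performance per watt`.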

That's a significant performance boost within the same year and on the same process, while also saving power. The GTX 480 truly sucked. I didn't like it on arrival, but it wasn't until the 580 came along eight months later that I understood just how rubbish it really was. I concluded my GTX 580 coverage by saying the following...

"The GeForce GTX 580 is unquestionably the fastest single-GPU graphics card available today, representing a better value than any other high-end video card. However, it's not the only option for those looking to spend $500. Dual Radeon HD 6870s remain very attractive at just under $500 and deliver more performance than a single GeForce GTX 580 in most titles. However, multi-GPU technology isn't without pitfalls, and given the choice we would always opt for a single high-end graphics card.

Nvidia's GeForce GTX 580 may be the current king of the hill, but this could all change next month when AMD launches their new Radeon HD 6900 series. AMD was originally expected to deliver its Cayman XT and Pro-based Radeon HD 6970 and 6950 graphics cards sometime during November, but they have postponed their arrival until mid-December for undisclosed reasons. If you don't mind holding off a few short weeks, the wait could be worth some savings or potentially more performance for the same dollars depending on what AMD has reserved for us."

For those of you wondering, the Radeon HD 6970 turned out to be a bit of a disappointment. It fell well short of the GTX 580 and, as a result, was priced to compete with the GTX 570, allowing Nvidia to hold the single-GPU performance crown without dispute for over a year. It would be interesting to see how those two stack up today; perhaps that's something we can look at in the future.

So, we've established that the GTX 580 was able to save face for Nvidia in 2010 and retain the performance crown until 28nm GPUs arrived in 2012, reigniting the graphics war once more. What we're looking to see now is just how well its tubby 520mm² die performs today.

Can the GTX 580 and its 512 CUDA cores clocked at 772MHz handle modern games? Sure, it has GDDR5 memory on a rather wide 384-bit bus pumping out an impressive 192GB/s, but there's only 1.5GB of it, at least on most models. There were 3GB variants floating around, but most people picked up the original 1.5GB version.
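The ~192GB/s bandwidth figure is simply the bus width (in bytes) multiplied by the memory's effective data rate. The sketch below assumes an effective GDDR5 rate of 4008 MT/s for the reference GTX 580, which this article doesn't state; only the 384-bit bus width comes from the text.

```python
def peak_bandwidth_gbs(data_rate_mts: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in decimal GB/s: transfers per second x bytes per transfer."""
    bytes_per_transfer = bus_width_bits / 8
    return data_rate_mts * 1e6 * bytes_per_transfer / 1e9

# 384-bit bus from the article; 4008 MT/s effective rate is an assumption.
print(f"{peak_bandwidth_gbs(4008, 384):.1f} GB/s")
```

With those inputs it prints `192.4 GB/s`. Note that bandwidth says nothing about capacity: a wide, fast bus can't compensate once a game's assets overflow the 1.5GB frame buffer.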

To see how it handles I'm going to compare it with the Radeon HD 7950 along with the more recently released GeForce GTX 1050, 1050 Ti and Radeon RX 560. Let's check out those results before taking a gander at some actual gameplay.

Benchmarks

Battlefield 1, Dawn of War III, DiRT 4, For Honor

First up we have the Battlefield 1 results using the ultra quality preset, which, as it turns out, is a bad choice for the GTX 580 and its measly 1.5GB frame buffer. Don't worry though: after we go over all the graphs, I'll show some gameplay performance using more agreeable settings. For now, we can see that the 580 simply isn't cutting it here. At a little over 30% slower than the Radeon HD 7950, it fails to deliver what I would call playable performance.

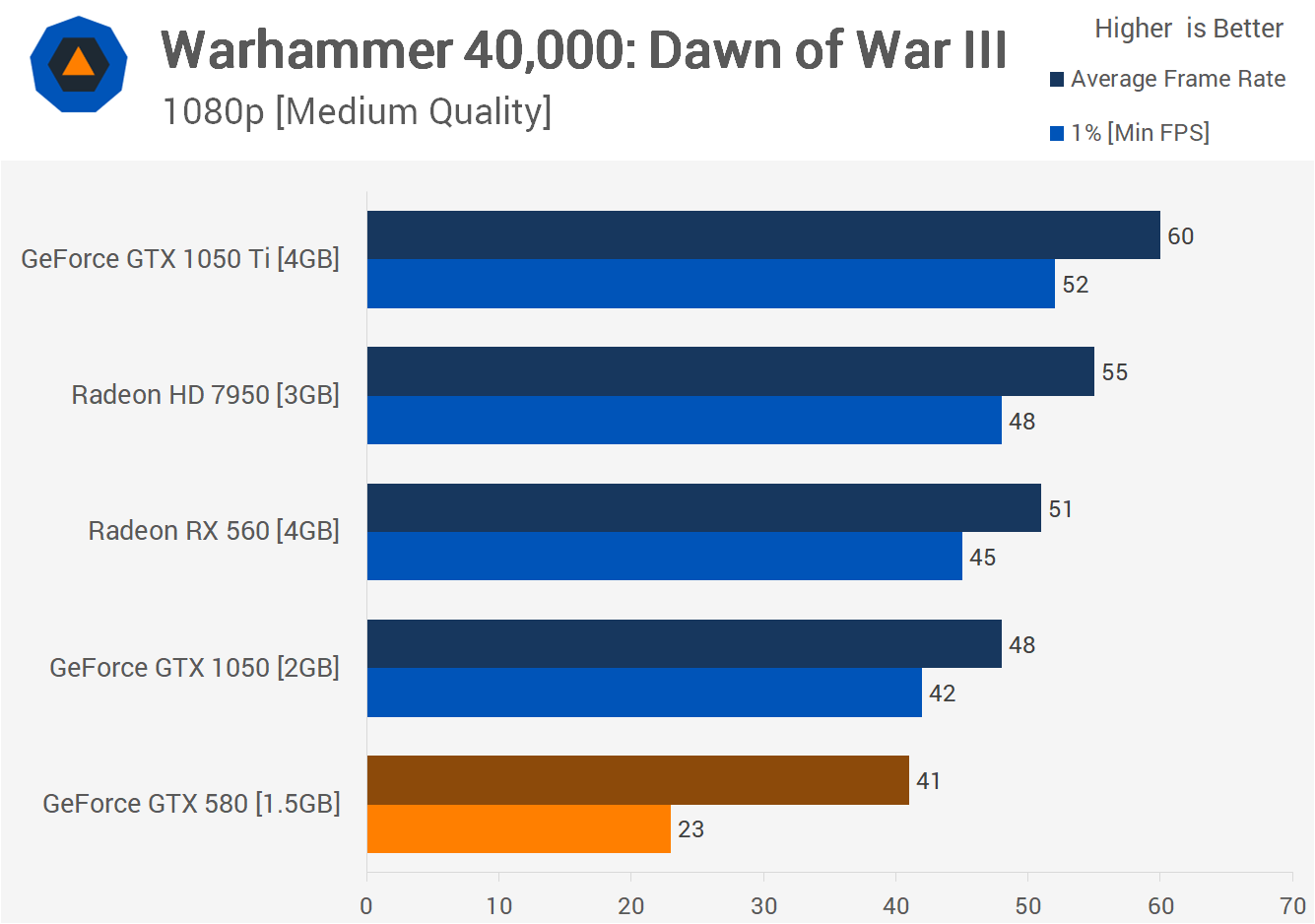

Next up we have Dawn of War III, which was tested using the more appropriate medium quality preset. Here we see a reasonable 41fps on average, but again, the GTX 580 is haunted by that limited VRAM buffer as the minimum frame rate drops down to just 23fps. This meant stuttering was an issue.
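Converting frame rates into frame times shows why that minimum hurts. In this sketch (the 30fps entry is included only as a common rule-of-thumb playability threshold), a 41fps average means a new frame roughly every 24ms, while dips to 23fps stretch that to about 43ms, almost 80% longer, which players perceive as stutter.

```python
def frame_time_ms(fps: float) -> float:
    """Convert a frame rate to the average time per frame in milliseconds."""
    return 1000.0 / fps

for label, fps in [("average", 41), ("minimum", 23), ("30fps threshold", 30)]:
    print(f"{label}: {fps} fps -> {frame_time_ms(fps):.1f} ms per frame")
```

This prints 24.4ms for the average, 43.5ms for the minimum and 33.3ms for the 30fps line.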

DiRT 4 was also tested using the medium quality settings, and here the GTX 580 delivered a quite good experience: certainly playable and, for the most part, very smooth at 1080p.

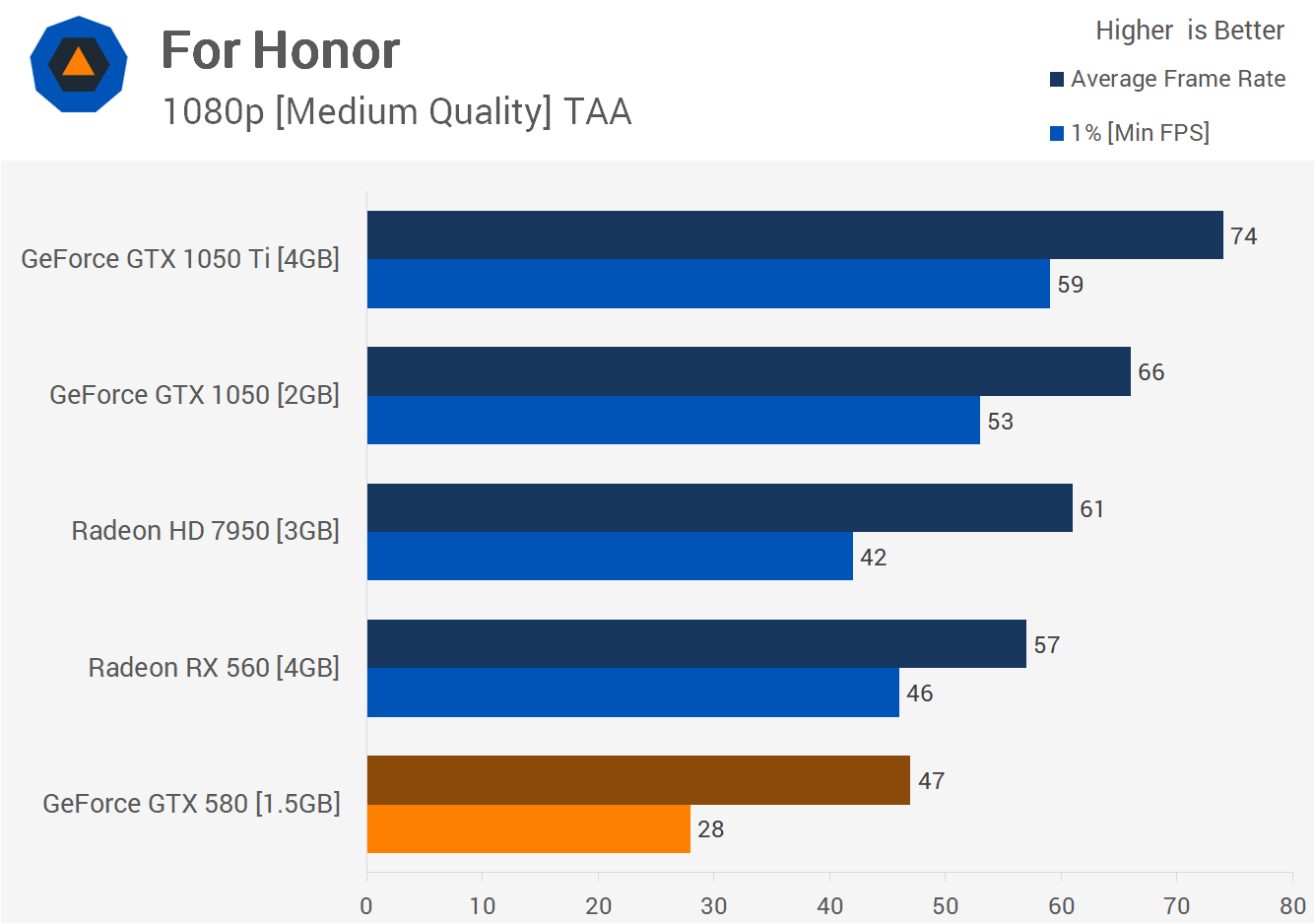

Moving on, we have For Honor, and here we found a mostly playable experience, if that makes sense. At times we dropped below 30fps, which made gameplay noticeably choppier than on, say, the RX 560 or HD 7950. It's also interesting to note that the GTX 1050 absolutely obliterates 2010's flagship part.
